Replicability Prediction

Replicability matters, but judging it has traditionally been more art than science. ReviewerZero's Replicability Prediction transforms curated criteria into a transparent, quantified assessment that helps you strengthen your research before submission.

Replicability Assessment

Why Replicability Prediction?

The replication crisis has highlighted serious concerns across scientific disciplines:

- Many published findings fail to replicate

- Reviewers struggle to consistently assess replicability

- Authors may not know which factors most affect replicability

- Journals increasingly require transparency and rigor

Our system provides an objective, criteria-based assessment that helps identify weaknesses before they become problems.

What We Evaluate

The system assesses several factors that prior research has linked to replicability:

| Factor | Description |
|---|---|
| Sample Size | Is the sample adequate for the claims being made? |
| Data Transparency | Are data, code, and materials available? |
| Methodological Rigor | Are methods described in sufficient detail? |
| Statistical Practices | Are appropriate analyses used and reported correctly? |
| Pre-registration | Was the study pre-registered? |
| Effect Size Reporting | Are effect sizes reported with confidence intervals? |
| Power Analysis | Is statistical power addressed? |

How It Works

Criteria-Based Assessment

Each factor is evaluated against established best practices:

- Text analysis - The system scans your manuscript for relevant statements

- Evidence extraction - Specific passages are identified that support or undermine each criterion

- Scoring - Each factor receives a score based on available evidence

- Aggregation - Individual scores combine into an overall prediction
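
ReviewerZero does not document how its text analysis works internally. The sketch below illustrates the first two steps under a simple assumption: evidence is found by matching keyword cues sentence by sentence. The `CUES` patterns and the `split_sentences` and `extract_evidence` helpers are hypothetical, and the patterns are illustrative only. They are not the system's actual criteria.

```python
import re

# Hypothetical cue patterns for a few factors. Illustrative only; these are
# not ReviewerZero's actual criteria.
CUES = {
    "Sample Size": [r"\bn\s*=\s*\d+", r"\bparticipants\b"],
    "Data Transparency": [
        r"\bdata (?:are|is) (?:publicly )?available\b",
        r"\bosf\.io\b",
        r"\bgithub\.com\b",
    ],
    "Pre-registration": [r"\bpre-?regist(?:ered|ration)\b"],
    "Power Analysis": [r"\bpower analysis\b", r"\bsample size justification\b"],
}


def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extract_evidence(text):
    """Return, for each factor, the sentences that match any of its cue patterns."""
    evidence = {factor: [] for factor in CUES}
    for sentence in split_sentences(text):
        for factor, patterns in CUES.items():
            if any(re.search(p, sentence, re.IGNORECASE) for p in patterns):
                evidence[factor].append(sentence)
    return evidence


example = (
    "We recruited 120 participants (N = 120). "
    "The study was preregistered. "
    "Data are available in a public repository."
)
evidence = extract_evidence(example)
# A factor with no matching sentences (here, Power Analysis) has no evidence,
# which a later scoring step could treat as "not met".
```

In a real manuscript, keyword matching misses paraphrases and can match negations such as "was not preregistered". The sketch only shows the shape of the step: passages go in, and per-factor evidence comes out.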

Transparent Scoring

Unlike black-box predictions, our system shows:

- Overall probability - The predicted likelihood of successful replication

- Factor breakdown - How each criterion contributes to the score

- Evidence citations - Specific passages from your manuscript

- Improvement areas - Clear recommendations for strengthening your research

Understanding Results

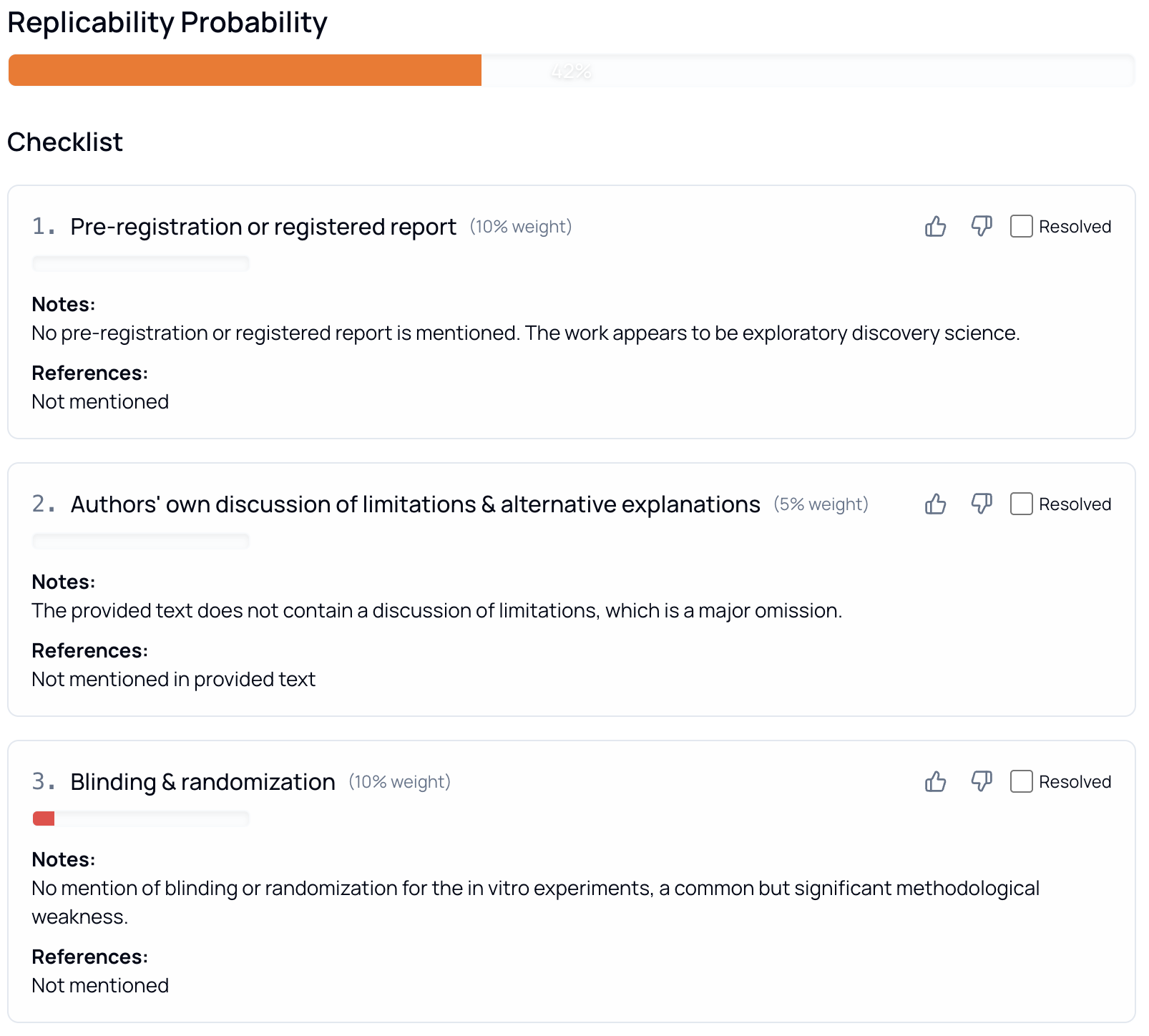

Replicability Score

The overall score represents the predicted probability of successful replication:

| Score Range | Interpretation |
|---|---|
| 80-100% | Strong replicability indicators |
| 60-79% | Good, with room for improvement |
| 40-59% | Moderate concerns - review recommendations |
| 0-39% | Significant concerns - address before submission |
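
The bands in this table amount to a simple threshold function. The sketch below uses a hypothetical helper, `interpret_score`. It assumes each band's lower edge is inclusive and that a non-integer score stays in the lower band until it reaches the next threshold, so 79.5 counts as "Good". The table itself does not specify how fractional scores are handled.

```python
def interpret_score(score: float) -> str:
    """Map an overall score (0-100) to the interpretation bands in the score table.

    Assumes band edges are inclusive lower bounds (80, 60, 40), so a score
    such as 79.5 falls into the 60-79 band.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    if score >= 80:
        return "Strong replicability indicators"
    if score >= 60:
        return "Good, with room for improvement"
    if score >= 40:
        return "Moderate concerns - review recommendations"
    return "Significant concerns - address before submission"


for s in (92, 71, 45, 20):
    print(s, interpret_score(s))
```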

Factor Details

For each evaluated factor, you'll see:

- Status - Whether the criterion is met, partially met, or not met

- Evidence - Quotes from your manuscript supporting the assessment

- Recommendations - Specific actions to improve this factor

- Weight - How much this factor affects the overall score
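
The sketch below shows one way status and weight could combine into an overall score and a per-factor breakdown. It assumes "met", "partially met", and "not met" map to 1.0, 0.5, and 0.0, and that the overall score is a weighted average scaled to 0-100. The mapping, the weights, and the `FactorResult` and `overall_score` names are all hypothetical. ReviewerZero's actual scoring is not documented here, and a plain weighted average like this is not a calibrated probability of replication.

```python
from dataclasses import dataclass

# Assumed numeric value for each status; the real mapping is not documented.
STATUS_VALUE = {"met": 1.0, "partially met": 0.5, "not met": 0.0}


@dataclass
class FactorResult:
    name: str
    status: str    # "met", "partially met", or "not met"
    weight: float  # relative importance of the factor (hypothetical values)


def overall_score(results):
    """Return a 0-100 weighted-average score and each factor's share of it."""
    total_weight = sum(r.weight for r in results)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    contributions = {
        r.name: 100 * r.weight * STATUS_VALUE[r.status] / total_weight
        for r in results
    }
    return sum(contributions.values()), contributions


results = [
    FactorResult("Sample Size", "partially met", 2.0),
    FactorResult("Data Transparency", "met", 1.0),
    FactorResult("Pre-registration", "not met", 1.0),
]
score, breakdown = overall_score(results)
```

In this example, Sample Size contributes 25 points, Data Transparency 25, and Pre-registration 0, for an overall score of 50. Sample Size and Pre-registration each leave 25 points unclaimed, which is the kind of comparison a factor breakdown makes visible.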

Interactive Checklist

The system provides an interactive checklist where you can:

- Track which criteria you've addressed

- Mark items as complete as you revise

- See your score update in real time

- Export the checklist for your records

Common Issues

Sample Size Concerns

- Sample too small for claimed effects

- No power analysis or justification provided

- Subgroup analyses with inadequate n
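
A sample size justification can be as short as one a priori calculation. The sketch below uses the standard normal approximation for a two-sided, two-sample comparison of means: n per group = 2 * ((z_(1 - alpha/2) + z_(power)) / d)^2, where d is the expected standardized effect size (Cohen's d). This is a textbook formula, not part of ReviewerZero, and `n_per_group` is a hypothetical helper name. Exact t-test calculations in dedicated power software give slightly larger numbers for small samples.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample test of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    rounded up. Slightly underestimates the exact t-test answer.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


print(n_per_group(0.5))
```

For d = 0.5, alpha = 0.05, and 80% power, this gives 63 per group; the exact t-test answer is 64. Smaller expected effects need much larger samples.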

Transparency Gaps

- Data not shared or availability unclear

- Code/materials not provided

- Pre-registration not mentioned

Methodological Issues

- Insufficient detail to replicate procedures

- Missing important control conditions

- Unclear exclusion criteria

Statistical Practices

- P-values without effect sizes

- Multiple comparisons without correction

- Selective reporting indicators
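
Correcting for multiple comparisons does not require special software. The sketch below implements the Holm-Bonferroni step-down procedure, which is one common correction among several; Bonferroni or false discovery rate methods may suit your design better. `holm_adjust` is a hypothetical helper name. It returns adjusted p-values in the original order, which you then compare against your chosen alpha.

```python
def holm_adjust(p_values):
    """Holm-Bonferroni adjusted p-values, returned in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The k-th smallest p-value (rank k - 1) is multiplied by (m - k + 1), capped at 1.
        value = min(1.0, (m - rank) * p_values[i])
        # Step-down adjustment: adjusted values never decrease with rank.
        running_max = max(running_max, value)
        adjusted[i] = running_max
    return adjusted


raw = [0.01, 0.04, 0.03]
adjusted = holm_adjust(raw)
significant = [p_adj < 0.05 for p_adj in adjusted]
```

For example, raw p-values of 0.01, 0.04, and 0.03 adjust to approximately 0.03, 0.06, and 0.06. Only the first remains significant at alpha = 0.05.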

Improving Your Score

Quick Wins

- Add a data availability statement

- Report effect sizes with confidence intervals

- Include power analysis or sample size justification

- Clarify your pre-registration status
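
Reporting an effect size with its confidence interval takes only a few lines. The sketch below computes Cohen's d for two independent groups using the pooled standard deviation. It adds an approximate confidence interval based on a common large-sample formula for the standard error of d. `cohens_d_ci` is a hypothetical helper. For small samples or other designs, an exact (noncentral t) interval or your statistics package's built-in routine is preferable.

```python
import math
from statistics import NormalDist, mean, stdev


def cohens_d_ci(group1, group2, confidence=0.95):
    """Cohen's d (pooled SD) with an approximate normal-theory confidence interval."""
    n1, n2 = len(group1), len(group2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    s1, s2 = stdev(group1), stdev(group2)  # sample SDs (n - 1 denominator)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    if pooled_sd == 0:
        raise ValueError("pooled standard deviation is zero")
    d = (mean(group1) - mean(group2)) / pooled_sd
    # Common large-sample approximation to the standard error of d.
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return d, (d - z * se, d + z * se)


d, (lo, hi) = cohens_d_ci([5, 6, 7], [3, 4, 5])
```

With these two tiny groups, d is 2.0 and the 95% interval runs from roughly 0.04 to 3.96. The interval's width shows how little three observations per group can tell you, which a bare p-value would hide.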

Deeper Improvements

- Expand your methods section with replication-enabling detail

- Share code and materials in a repository

- Address alternative explanations

- Report all conducted analyses

Best Practices

Before Running Assessment

- Ensure your manuscript is near-final

- Include all relevant methods details

- Add data/code availability statements

Interpreting Results

- Use the assessment as a guide, not a guarantee

- Consider discipline-specific norms

- Prioritize factors most relevant to your research type

- Discuss results with co-authors

After Assessment

- Address high-impact factors first

- Re-run the assessment after revisions

- Document your improvements

- Consider the reviewer's perspective

Limitations

What This Is Not

- A guarantee - High scores don't guarantee replication

- Field-specific - The criteria are general; some are more relevant in certain disciplines than in others

- Complete - Not all replicability factors can be assessed from text alone

Context Matters

Some legitimate research may score lower due to:

- Exploratory or hypothesis-generating studies

- Resource constraints that limit sample sizes

- Novel methods without established practices

- Confidential data that cannot be shared

Related Resources

- AI Review - Structured peer-review feedback

- Statistical Checks - Verify reported statistics

- Guideline Compliance - Meet journal requirements