Can AI peer review be effective? Let's have a chat

Every academic knows the feeling: waiting months for mediocre feedback while reviewers juggle impossible workloads. Here's how ReviewerZero's purpose-built AI delivers structured critique, replicability predictions, and journal recommendations.

According to a 2018 global survey, 42% of scholars feel overcommitted, 70% decline review invitations outside their expertise, and just 10% of reviewers handle almost half of all reviews. This imbalance strains the system to a breaking point. Something has to give, and many are asking: Can Artificial Intelligence lend a hand?

At ReviewerZero AI, we've been thinking about this problem for a while. We've been building an automated review system that, in our studies (1,2), scientists significantly preferred over human reviewers, general-purpose LLMs, and other automated peer review systems. In this article, we introduce our new suite of tools: automated review, replicability assessment, journal recommendation, and journal guideline checks.

Too Many Papers, Too Little Time

The explosion of research output in recent years is a double-edged sword. On one hand, science is advancing rapidly; on the other, no one has time to vet all these papers.

Overstretched reviewers may provide only superficial feedback or delay their reports for months. Indeed, reviewer fatigue has become a recognized issue in academia. Surveys show that many academics are simply "too busy" to review and feel burnt out by the torrent of requests. This leads to delays in publishing and, worse, uneven quality in peer review—some papers get rigorous scrutiny, while others receive perfunctory, low-effort reviews.

From the author's perspective, the process can seem like a lottery. You might get an expert who gives constructive criticism, or you might get a cranky "Reviewer #2" whose harsh, even aggressive, comments add little value. Unprofessional review comments are common enough to have spawned internet memes and Twitter accounts, and one analysis of such publicly shared comments found that the most frequent type was "aggressive": often harshly negative and even impolite.

What Does Good Peer Review Look Like?

Before exploring AI solutions, it's worth asking: What should a proper peer review accomplish? At its core, peer review is about quality control and constructive critique. A diligent reviewer wears multiple hats—a fact-checker, a methodologist, a mentor, and an ethics watchdog. Here are some fundamentals of an effective peer review:

Soundness of Methods & Analysis: Verify that the research methods are appropriate and correctly applied. This includes checking whether the statistical analyses suit the data and whether the results support the conclusions. Catching errors here is crucial to keep false findings out of the published literature.

Novelty and Significance: Evaluate the work's originality and importance in context. Does it advance the field or just rehash prior studies? This requires knowledge of the literature: a reviewer should compare the manuscript's claims against existing knowledge. Is the hypothesis truly new, and are the results impactful? Assessing novelty has always been a human strength, but AI can assist by rapidly cross-referencing a vast body of literature.

Clarity and Replicability: A paper should be clear enough that other scientists can understand and attempt to replicate the work. That means checking if the manuscript includes enough detail (data, code, protocols) and is written clearly. The "replicability crisis" in science has taught us that many published findings don't hold up when repeated.

Appropriate Citations & Context: Ensure the authors acknowledge prior work and provide proper attribution. Are there missing references to related studies? Conversely, are they claiming too much novelty without citing key papers? A reviewer should catch plagiarism or uncited ideas, whether intentional or accidental.

Constructive Criticism: Perhaps most importantly, a peer review should help authors improve their work. Even if a paper has flaws, a quality review will specifically identify the problems and ideally suggest ways to address them. Tone matters, too—critiques should be professional and focused on the science, not personal attacks.

Integrity Checks: Finally, a reviewer must be alert to serious research integrity issues. These go beyond normal scientific debate into misconduct or fatal flaws, such as plagiarism, data fabrication, unethical experiments, image manipulation, and undisclosed conflicts of interest.

In practice, delivering on all these fronts is hard, and human reviewers often excel in only some areas. One person might be great at spotting methodological flaws but miss a subtle plagiarism issue. Another might be overly supportive, or overly critical. The variability is high, and as studies show, the quality of human reviews ranges from outstanding to very poor.

Early AI Attempts: Why They Fell Short

The idea of using algorithms to ease the peer review burden isn't entirely new. Before the era of large language models, journals used tools like plagiarism checkers or statistics checkers to flag obvious issues. Those tools are useful but limited—they handle narrow tasks and don't actually understand the content.

With the advent of powerful AI models, the dream of an AI that can "read" a manuscript and produce an informed review started to look feasible. So, how has it gone so far?

The Simplest Approach: Prompting a General AI. Suppose you take a paper, feed its text to an AI like ChatGPT, and prompt: "Please review this scientific manuscript for me." Early adopters tried this, and it does generate a response: often politely phrased, summarizing the paper, and giving some generic feedback. However, our studies (1,2) found that these "naïve AI reviews" tended to be shallow and over-enthusiastic.

A standard language model, without special training, is likely to say "The paper is well-written and presents interesting results" and only gently point out a couple of obvious issues. It over-praises and under-critiques. Why? By default, AI models are trained to be helpful and inoffensive, and they may not reliably catch technical flaws or recognize when a claim is unsupported.

Building Smarter AI Reviewers: Purpose-Built Solutions

At ReviewerZero, we've developed a purpose-built AI system that addresses these challenges head-on. This isn't a generic AI bolted onto the problem; it's a tailored system with peer review expertise baked in. Here's what makes our approach special:

Domain-Trained Models: Our AI models have been fine-tuned on scholarly content and actual peer reviews, so they understand the tone and criteria of academic feedback. They are equipped to be appropriately critical (not just polite), and they "know" about research methodologies, common analysis techniques, and domain-specific terminology far better than a general chatbot.

Multi-Modal Analysis: Our system can handle more than just text. It parses figures, equations, and references. It can cross-check citations with external databases to see if the authors missed important prior work or if any referenced study has been retracted. If a manuscript claims "to our knowledge, this is the first study to…", the AI can do a quick literature scan to gauge if that claim holds water.

Privacy and Security: A major concern with using external AI services for sensitive manuscripts is confidentiality. ReviewerZero's models run entirely on secure servers, and we do not use your manuscript data to train any models—period. Any paper you upload stays private.
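To make the citation cross-check mentioned under Multi-Modal Analysis more concrete, here is a minimal sketch of one piece of it: flagging cited DOIs that appear in a list of retracted works. It assumes the retraction list has already been fetched into memory (a real system would query an external database), and the function names and DOIs are hypothetical placeholders, not ReviewerZero's implementation.

```python
from typing import Iterable, List

# Prefixes that commonly wrap a bare DOI in reference lists.
DOI_PREFIXES = ("https://doi.org/", "http://doi.org/", "doi:")


def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip a URL or 'doi:' prefix.

    DOI names are case-insensitive, so normalizing lets equal DOIs compare equal.
    """
    d = doi.strip().lower()
    for prefix in DOI_PREFIXES:
        if d.startswith(prefix):
            return d[len(prefix):]
    return d


def flag_retracted_citations(cited: Iterable[str], retracted: Iterable[str]) -> List[str]:
    """Return the cited DOIs (as written in the manuscript) that appear in the retraction list."""
    retracted_set = {normalize_doi(d) for d in retracted}
    return [doi for doi in cited if normalize_doi(doi) in retracted_set]


# Hypothetical placeholder DOIs, invented for illustration.
cited_dois = ["10.1000/example.1", "https://doi.org/10.1000/EXAMPLE.2", "doi:10.1000/example.3"]
retracted_dois = ["10.1000/example.2"]
print(flag_retracted_citations(cited_dois, retracted_dois))  # -> ['https://doi.org/10.1000/EXAMPLE.2']
```

Normalizing before comparing matters because the same DOI often appears in different forms across reference lists and databases.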

Three AI-Powered Tools That Work Today

Most excitingly, our latest update introduces a trio of AI-generated reports:

1. Structured Review Report

Instead of a wall of text that might say "the methodology needs work," our AI organizes feedback into clear, actionable sections:

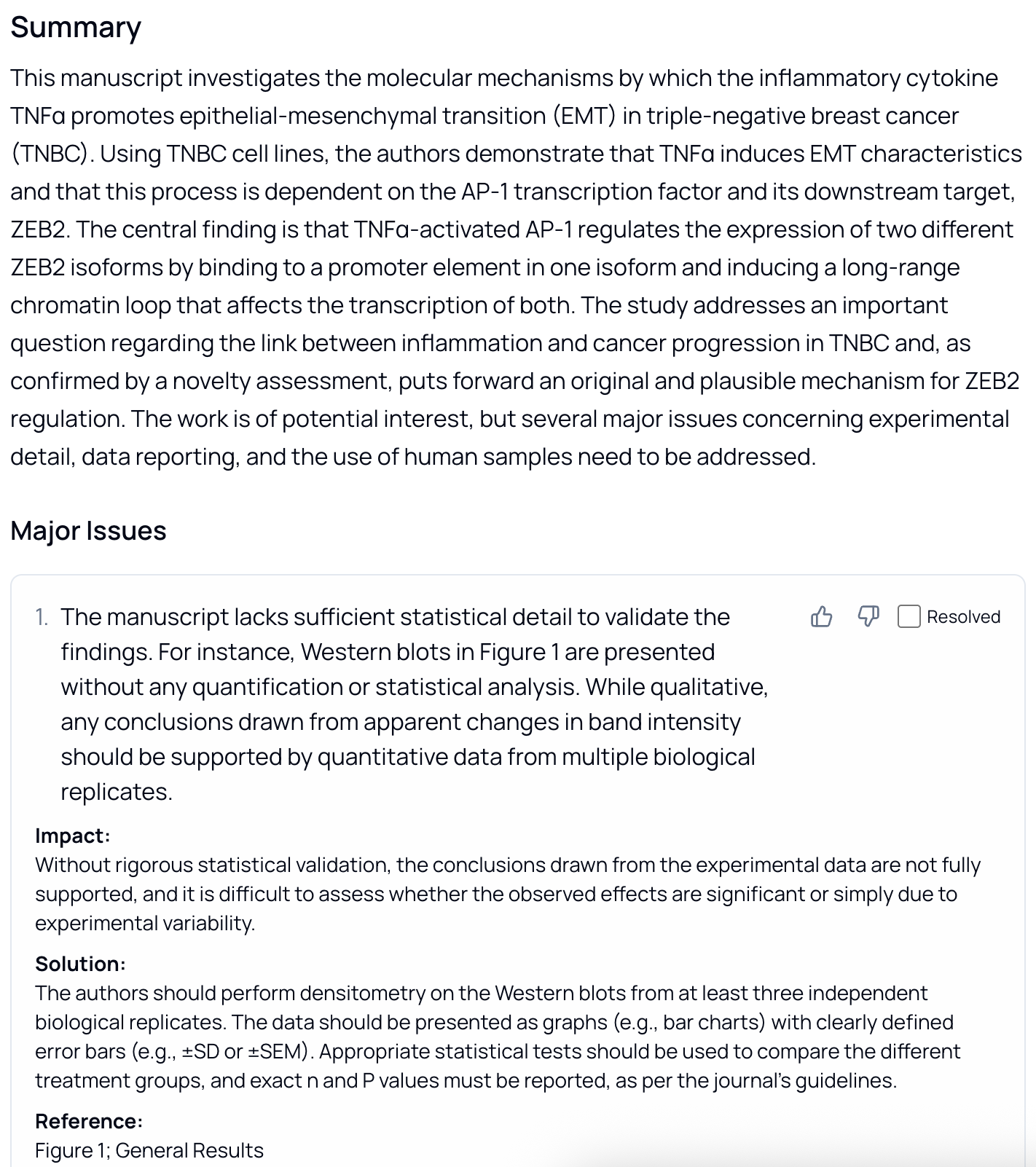

• Major Issues — deal-breakers that must be fixed before publication, like fundamental flaws in experimental design or unsupported conclusions.

• Minor Issues — important but often cosmetic improvements that polish the work, such as unclear figure legends or minor statistical reporting issues.

• Additional Comments — helpful context and suggestions that could strengthen the paper, including recommendations for additional analyses or clarifications.

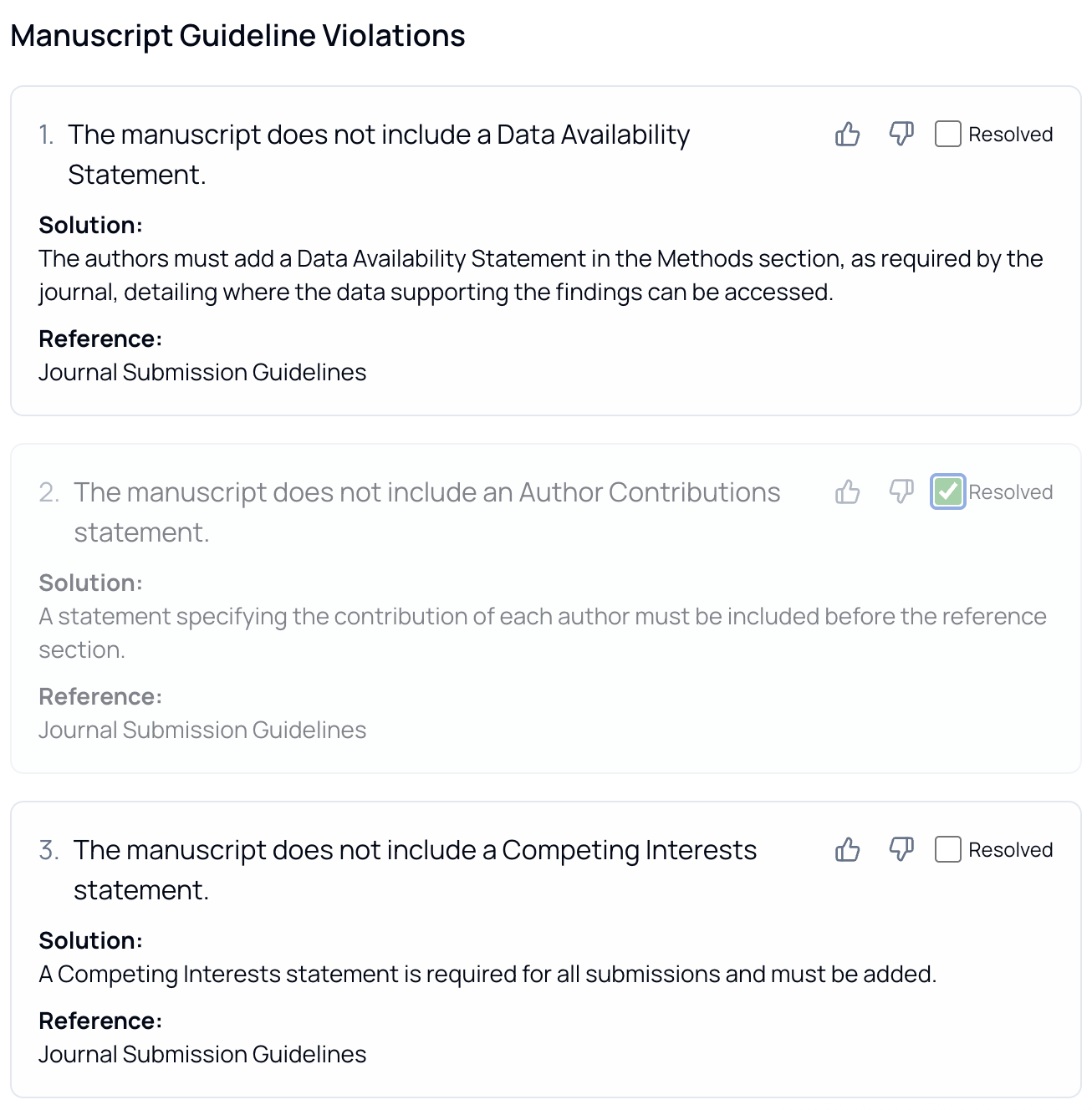

• Guideline Violations — missing ethics statements, absent conflict of interest disclosures, or other policy oversights that journals require.

Each comment comes with its own interactive card where you can give a quick thumbs-up, thumbs-down, or mark it resolved. There's no more guessing about which reviewer comments you've addressed. Complete them all and you'll get a burst of on-screen confetti—a small celebration that traditional peer review rarely provides!

For authors, this is immensely useful feedback. It's like having a mentor-reviewer go through your paper with a fine-toothed comb before real reviewers do, giving you a chance to fix issues and bolster your submission. This proactive approach can dramatically improve your chances of acceptance and reduce the dreaded revise-and-resubmit cycle.
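The report structure described above (four comment categories, with each comment carrying a thumbs-up/down rating and a resolved flag) maps naturally onto a small data model. The sketch below is illustrative only: the class and field names are hypothetical, and it is not ReviewerZero's actual schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Category(Enum):
    """The four sections of the structured report."""
    MAJOR = "Major Issues"
    MINOR = "Minor Issues"
    ADDITIONAL = "Additional Comments"
    GUIDELINE = "Guideline Violations"


@dataclass
class ReviewComment:
    category: Category
    text: str
    rating: Optional[bool] = None  # True = thumbs-up, False = thumbs-down, None = not rated yet
    resolved: bool = False


@dataclass
class ReviewReport:
    comments: List[ReviewComment] = field(default_factory=list)

    def by_category(self, category: Category) -> List[ReviewComment]:
        """Comments belonging to one section, in their original order."""
        return [c for c in self.comments if c.category is category]

    def outstanding(self) -> List[ReviewComment]:
        """Comments the author has not yet marked resolved."""
        return [c for c in self.comments if not c.resolved]

    def all_resolved(self) -> bool:
        """True once a non-empty report has every comment resolved (the 'confetti' moment)."""
        return bool(self.comments) and not self.outstanding()


report = ReviewReport([
    ReviewComment(Category.MAJOR, "The conclusions in the discussion are not supported by the data."),
    ReviewComment(Category.GUIDELINE, "The conflict of interest statement is missing."),
])
report.comments[0].rating = True     # author gives a thumbs-up
report.comments[0].resolved = True
print(len(report.outstanding()))     # -> 1
report.comments[1].resolved = True
print(report.all_resolved())         # -> True
```

Keeping the resolved state on each comment, rather than on the report as a whole, is what lets the author track progress item by item.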

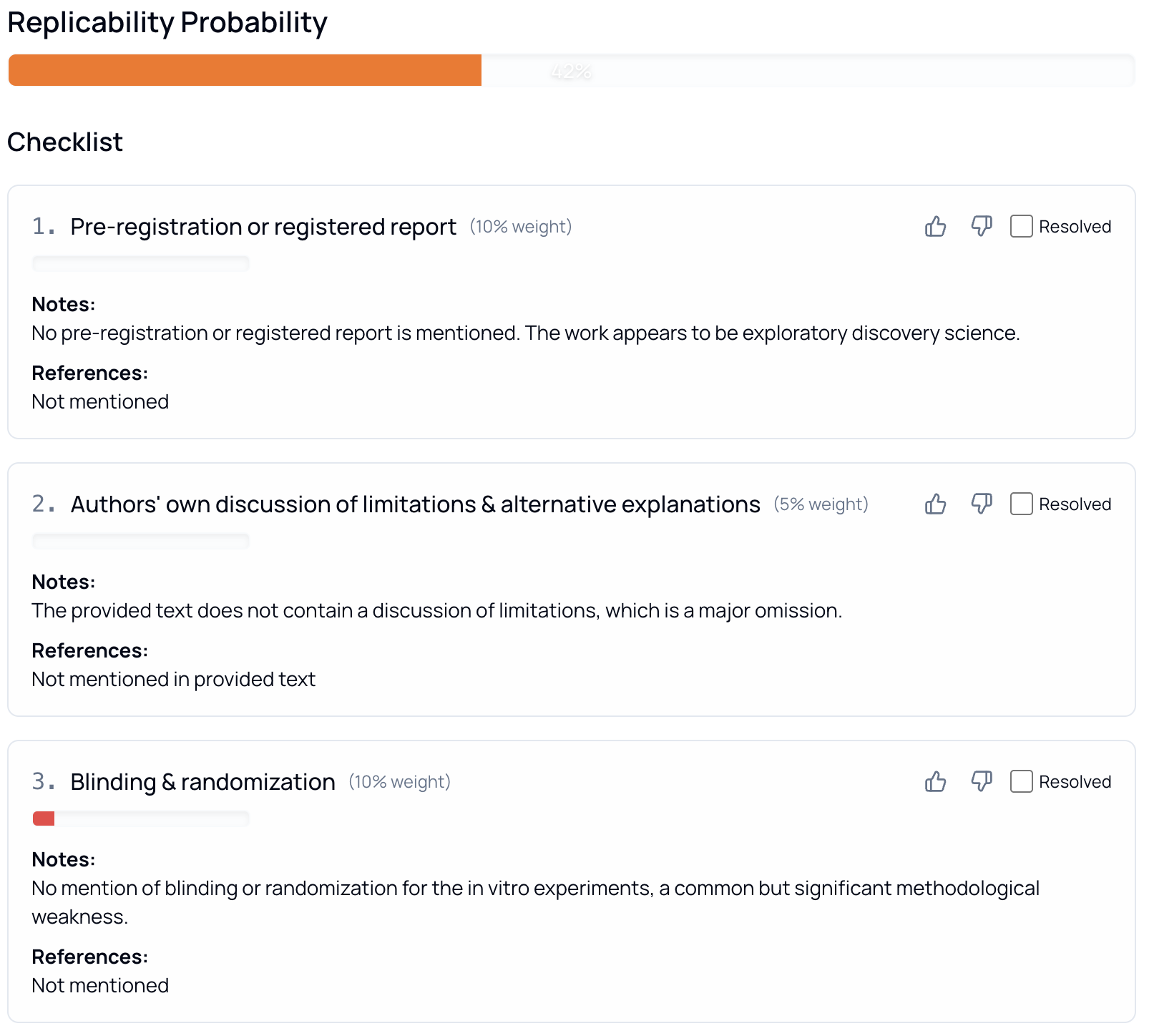

2. Replicability Prediction

Replicability matters, but judging it has always been more art than science. Our Replicability Assessment transforms a set of curated criteria into a transparent, quantified prediction. The system evaluates factors like sample size adequacy, data transparency, methodological rigor, and statistical practices, then computes a probability that the findings will hold up when others try to replicate them.
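One common way to turn scored criteria into a probability is a weighted sum passed through a logistic function. The sketch below assumes that approach. The criterion names mirror the factors listed above, but the weights and intercept are invented for illustration and are not the ones ReviewerZero uses.

```python
import math
from typing import Dict

# Hypothetical weights for criteria scored in [0, 1]; a real model would fit these
# to the outcomes of actual replication attempts.
WEIGHTS: Dict[str, float] = {
    "sample_size_adequacy": 1.5,
    "data_transparency": 1.0,
    "methodological_rigor": 1.2,
    "statistical_practices": 1.3,
}
BIAS = -2.5  # hypothetical intercept; the weights sum to 5.0, so all-0.5 scores give p of about 0.5


def logit_contributions(scores: Dict[str, float]) -> Dict[str, float]:
    """Per-criterion contribution (weight * score) to the logit, which keeps the prediction explainable."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing criterion scores: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"score for {name} must be in [0, 1], got {scores[name]}")
    return {name: weight * scores[name] for name, weight in WEIGHTS.items()}


def replicability_probability(scores: Dict[str, float]) -> float:
    """Logistic model: p = 1 / (1 + exp(-(BIAS + sum of weight * score)))."""
    z = BIAS + sum(logit_contributions(scores).values())
    return 1.0 / (1.0 + math.exp(-z))


example = {
    "sample_size_adequacy": 0.4,
    "data_transparency": 0.9,
    "methodological_rigor": 0.7,
    "statistical_practices": 0.6,
}
print(f"{replicability_probability(example):.2f}")
print(logit_contributions(example))
```

Returning the per-criterion contributions alongside the probability is one way to make a quantified prediction transparent: a reader can see which factor pulled the estimate up or down.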

3. Journal Recommendations

Choosing the wrong journal can waste both your time and the journal's. Our Journal Recommendation tool takes the guesswork out of this critical decision: the AI analyzes your manuscript's content, methodology, and scope, then suggests outlets whose scope fits your work.
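A simple baseline for matching a manuscript to journal scopes is to compare word-frequency vectors using cosine similarity. The sketch below assumes that technique purely for illustration; a production system would likely use richer representations such as embeddings. The stopword list, journal names, and scope texts are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

# A tiny, hypothetical stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "of", "in", "on", "for", "to", "with", "we", "is", "are", "this"}


def tokenize(text: str) -> Counter:
    """Lower-case word counts, ignoring stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend_journals(manuscript: str, scopes: Dict[str, str], top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank journals by similarity between the manuscript and each journal's scope text."""
    doc = tokenize(manuscript)
    ranked = sorted(
        ((name, cosine(doc, tokenize(scope))) for name, scope in scopes.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:top_k]


scopes = {
    "Journal A (hypothetical)": "neuroscience brain imaging cognition neural circuits",
    "Journal B (hypothetical)": "machine learning neural networks deep learning optimization",
    "Journal C (hypothetical)": "ecology biodiversity climate ecosystems",
}
abstract = "We train deep neural networks with a new optimization method for learning."
for name, score in recommend_journals(abstract, scopes, top_k=2):
    print(f"{name}: {score:.2f}")
```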

Looking Ahead: The Future of Peer Review

Together with our existing integrity checks for image duplication, statistical inconsistencies, and citation issues, these tools are our step toward what we believe peer review should always have been.

Ready to see for yourself how AI can transform your research workflow? Join our beta and experience the future of peer review today.

Interested in learning more about how AI is transforming research integrity? Check out our other posts on statistical integrity and explore all our capabilities on our features page.